GPT-4o vs Gemini 1.5 Pro: AI Model Comparison

Explore the key differences between OpenAI's and Google's latest language models

GPT-4o: specialties & advantages

GPT-4o is OpenAI's high-intelligence flagship model, designed for complex, multi-step tasks. It offers advanced capabilities and improved performance over previous models.

Key strengths include:

- Multimodal capabilities (text and vision)

- High intelligence and advanced reasoning abilities

- Superior performance across non-English languages

- Faster text generation (2x faster than GPT-4 Turbo)

- Improved efficiency and lower cost compared to GPT-4 Turbo

- Large context window of 128K tokens

GPT-4o is particularly well-suited for applications requiring sophisticated analysis, creative problem-solving, and handling of complex information across multiple modalities.

Best use cases for GPT-4o

Here are examples of ways to take advantage of its greatest strengths:

Complex Data Analysis

GPT-4o's advanced reasoning capabilities make it ideal for analyzing complex datasets and providing in-depth insights across various domains.

Multilingual and Multimodal Applications

With superior performance in non-English languages and multimodal inputs, GPT-4o excels in applications requiring diverse language processing and image understanding.

High-Stakes Decision Support

GPT-4o's high intelligence and advanced reasoning make it suitable for supporting critical decision-making processes in fields like finance, healthcare, and strategic planning.

Gemini 1.5 Pro: specialties & advantages

Gemini 1.5 Pro is Google's advanced language model, designed for complex, multi-step tasks. It offers improved capabilities over previous models.

Key strengths include:

- Multimodal capabilities (text and vision)

- Massive context window of 1 million tokens

- Advanced reasoning and problem-solving abilities

- Improved accuracy in complex tasks

- Enhanced performance in specialized domains

- Support for up to 8,192 output tokens per request

- Knowledge cutoff up to November 2023

Gemini 1.5 Pro is particularly well-suited for applications requiring sophisticated analysis, creative problem-solving, and handling of complex information across multiple modalities.

Best use cases for Gemini 1.5 Pro

On the other hand, here's what you can build with this LLM:

Advanced Data Analysis

Gemini 1.5 Pro's large context window and advanced reasoning capabilities make it ideal for analyzing complex datasets and providing in-depth insights.

Long-Form Content Generation

With its extensive context window, Gemini 1.5 Pro excels at generating coherent long-form content, maintaining context and consistency throughout.

Complex Problem-Solving

Gemini 1.5 Pro's advanced reasoning capabilities make it suitable for tackling complex, multi-step problems in fields like scientific research and strategic planning.

In summary

When comparing GPT-4o and Gemini 1.5 Pro, several key differences emerge:

- Context Window: Gemini 1.5 Pro offers a much larger context window (1 million tokens) compared to GPT-4o (128K tokens), allowing for processing of significantly larger data volumes.

- Performance: Both models perform similarly on various benchmarks, with GPT-4o showing a slight edge in some areas like MMLU (88.7% vs 81.9% for 5-shot) and MMMU (59.4% vs 58.5%).

- Cost: GPT-4o is more cost-effective, with a blended price of $7.50 per million tokens (3:1 input-to-output ratio). Gemini 1.5 Pro costs $7.00 per million input tokens and $21.00 per million output tokens, which works out to $10.50 per million tokens at the same 3:1 blend.

- Speed: Gemini 1.5 Pro has a faster output speed of 163.6 tokens per second compared to GPT-4o's 86.8 tokens per second.

- Latency: GPT-4o has lower latency with a Time to First Token (TTFT) of 0.45 seconds, while Gemini 1.5 Pro has a TTFT of 1.06 seconds.

- Maximum Output: Gemini 1.5 Pro is limited to 8,192 tokens per request, while GPT-4o's maximum output is not specified but likely higher.

- Release Date: Gemini 1.5 Pro was released earlier (February 15, 2024) than GPT-4o (May 13, 2024).

- Knowledge Cutoff: Gemini 1.5 Pro has slightly more recent training data (November 2023) compared to GPT-4o (October 2023).

For most applications requiring advanced reasoning and multimodal inputs, both models offer compelling options. GPT-4o may be preferable for tasks requiring lower latency, slightly higher performance on certain benchmarks, or cost-effectiveness for larger volumes. Gemini 1.5 Pro might be better suited for applications needing to process extremely large contexts or generate faster outputs.
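The trade-off between latency and output speed can be made concrete with a little arithmetic. The sketch below is a rough illustration, assuming total response time is simply TTFT plus output tokens divided by output speed, and using only the figures quoted above. The `ModelSpeed` class and both function names are hypothetical helpers, not part of any vendor SDK, and real timings vary with load, prompt size, and region.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpeed:
    name: str
    ttft_s: float        # time to first token, in seconds
    tokens_per_s: float  # output speed once streaming starts


# Figures taken from the comparison above.
GPT_4O = ModelSpeed("GPT-4o", ttft_s=0.45, tokens_per_s=86.8)
GEMINI_15_PRO = ModelSpeed("Gemini 1.5 Pro", ttft_s=1.06, tokens_per_s=163.6)


def estimated_response_time(model: ModelSpeed, output_tokens: int) -> float:
    """Simplified estimate: wait for the first token, then stream the rest."""
    return model.ttft_s + output_tokens / model.tokens_per_s


def break_even_tokens(low_latency: ModelSpeed, high_throughput: ModelSpeed) -> float:
    """Output length at which both models finish at the same moment."""
    latency_gap = high_throughput.ttft_s - low_latency.ttft_s
    per_token_gap = 1 / low_latency.tokens_per_s - 1 / high_throughput.tokens_per_s
    return latency_gap / per_token_gap


if __name__ == "__main__":
    for n in (50, 500):
        for model in (GPT_4O, GEMINI_15_PRO):
            print(f"{model.name}: {n} tokens in ~{estimated_response_time(model, n):.2f}s")
    print(f"Break-even at ~{break_even_tokens(GPT_4O, GEMINI_15_PRO):.0f} output tokens")
```

Under these assumptions the break-even point is roughly 113 output tokens: shorter replies finish sooner on GPT-4o, while longer ones finish sooner on Gemini 1.5 Pro.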

Use Licode to build products out of custom AI models

Build your own apps with our out-of-the-box AI-focused features, like monetization, custom models, interface building, automations, and more!

Enable AI in your app

Licode comes with built-in AI infrastructure that allows you to easily craft a prompt and use any Large Language Model (LLM) like Google Gemini, OpenAI GPTs, and Anthropic Claude.

Supply knowledge to your model

Licode's built-in RAG (Retrieval-Augmented Generation) system helps your models understand a vast amount of knowledge with minimal resource usage.

Build your AI app's interface

Licode offers a library of pre-built UI components from text & images to form inputs, charts, tables, and AI interactions. Ship your AI-powered app with a great UI fast.

Authenticate and manage users

Launch your AI-powered app with sign-up and login pages out of the box. Set private pages for authenticated users only.

Monetize your app

Licode provides a built-in Subscriptions and AI Credits billing system. Create different subscription plans and set the amount of credits you want to charge for AI Usage.

Accept payments with Stripe

Licode makes it easy for you to integrate Stripe in your app. Start earning and grow revenue for your business.

Create custom actions

Give your app logic with Licode Actions. Perform database operations, AI interactions, and third-party integrations.

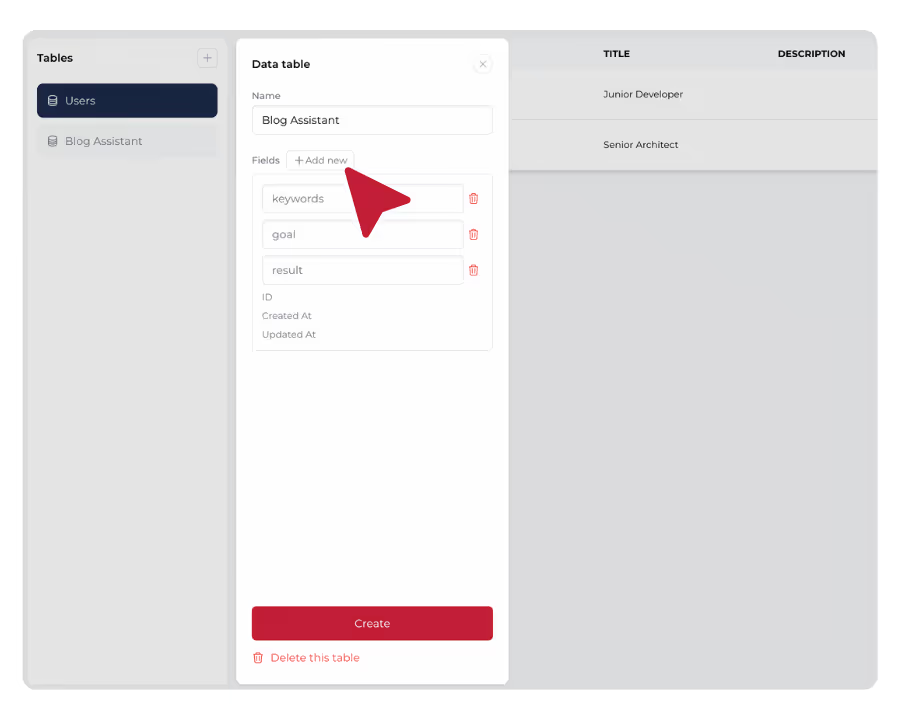

Store data in the database

Simply create data tables in a secure Licode database. Empower your AI app with data. Save data easily without any hassle.

Publish and launch

Just one click and your AI app will be online for all devices. Share it with your team, clients or customers. Update and iterate easily.

Browse our templates

StrawberryGPT

StrawberryGPT is an AI-powered letter counter that can tell you the correct number of "r" occurrences in "Strawberry".

AI Tweet Generator

An AI tool to help your audience generate a compelling Twitter / X post. Try it out!

YouTube Summarizer

An AI-powered app that summarizes YouTube videos and produces content such as a blog, summary, or FAQ.

Don't take our word for it

I've built with various AI tools and have found Licode to be the most efficient and user-friendly solution. In a world where only 51% of women currently integrate AI into their professional lives, Licode has empowered me to create innovative tools in record time that are transforming the workplace experience for women across Australia.

Licode has made building micro tools like my YouTube Summarizer incredibly easy. I've seen a huge boost in user engagement and conversions since launching it. I don't have to worry about my dev resource and any backend hassle.

FAQ

What are the main differences in capabilities between GPT-4o and Gemini 1.5 Pro?

The main differences in capabilities between GPT-4o and Gemini 1.5 Pro are:

- Context Window: Gemini 1.5 Pro has a much larger context window (1 million tokens) compared to GPT-4o (128K tokens).

- Performance: Both models perform similarly on benchmarks, with GPT-4o showing a slight edge in some areas like MMLU and MMMU.

- Speed: Gemini 1.5 Pro has a faster output speed (163.6 tokens/s) compared to GPT-4o (86.8 tokens/s).

- Latency: GPT-4o has lower latency with a TTFT of 0.45s, while Gemini 1.5 Pro has a TTFT of 1.06s.

- Maximum Output: Gemini 1.5 Pro is limited to 8,192 tokens per request, while GPT-4o's maximum output is likely higher but not specified.

- Knowledge Cutoff: Gemini 1.5 Pro has slightly more recent training data (November 2023) compared to GPT-4o (October 2023).

Which model is more cost-effective for general-purpose tasks?

The cost-effectiveness of GPT-4o and Gemini 1.5 Pro depends on the specific use case:

- GPT-4o costs $7.50 per million tokens (blended at a 3:1 input-to-output ratio).

- Gemini 1.5 Pro costs $7.00 per million input tokens and $21.00 per million output tokens, which works out to $10.50 per million tokens at the same 3:1 blend.

- For tasks with a high proportion of output tokens, GPT-4o may be more cost-effective, because Gemini 1.5 Pro's output price is three times its input price.

- For tasks that are almost entirely input processing, Gemini 1.5 Pro's effective price approaches its $7.00 input rate, so it might be more economical there.

- Consider the balance between cost and the specific capabilities required for your task, such as context window size and performance on relevant benchmarks.
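To compare the two price points on equal terms, it helps to compute a blended price for the same input-to-output mix. The following is a minimal sketch using only the prices quoted above; `blended_price` and `request_cost` are hypothetical helper names, and the 3:1 default mirrors the ratio used for the GPT-4o figure. GPT-4o's separate input and output prices are not given here, so the comparison only holds at that 3:1 mix.

```python
def blended_price(input_price: float, output_price: float,
                  input_parts: int = 3, output_parts: int = 1) -> float:
    """Weighted average price per million tokens for an input:output mix."""
    total_parts = input_parts + output_parts
    return (input_price * input_parts + output_price * output_parts) / total_parts


def request_cost(input_tokens: int, output_tokens: int,
                 input_price: float, output_price: float) -> float:
    """Cost in dollars of one request, with prices given per million tokens."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Prices per million tokens, as quoted in this comparison.
GEMINI_INPUT = 7.00
GEMINI_OUTPUT = 21.00
GPT_4O_BLENDED_3_TO_1 = 7.50

gemini_blended = blended_price(GEMINI_INPUT, GEMINI_OUTPUT)

if __name__ == "__main__":
    print(f"Gemini 1.5 Pro, 3:1 blend: ${gemini_blended:.2f} per million tokens")
    print(f"GPT-4o, 3:1 blend:         ${GPT_4O_BLENDED_3_TO_1:.2f} per million tokens")
    # Example request: 30,000 input tokens and 10,000 output tokens on Gemini 1.5 Pro.
    print(f"Example request: ${request_cost(30_000, 10_000, GEMINI_INPUT, GEMINI_OUTPUT):.2f}")
```

At the 3:1 mix, Gemini 1.5 Pro comes to $10.50 per million tokens against GPT-4o's $7.50. Prices change often, so treat these constants as placeholders to update from each provider's current pricing page.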

How do the models compare in terms of performance benchmarks?

GPT-4o and Gemini 1.5 Pro perform similarly on various benchmarks, with GPT-4o showing a slight edge in some areas:

- MMLU (Massive Multitask Language Understanding): GPT-4o scores 88.7% (5-shot) compared to Gemini 1.5 Pro's 81.9% (5-shot).

- MMMU (Massive Multi-discipline Multimodal Understanding): GPT-4o scores 59.4%, while Gemini 1.5 Pro scores 58.5% (0-shot).

- HellaSwag: Gemini 1.5 Pro achieves 93.3% (10-shot), while this benchmark is not available for GPT-4o.

- HumanEval: GPT-4o scores slightly higher with 87.2% (0-shot) compared to Gemini 1.5 Pro's 84.1% (0-shot).

- MATH: GPT-4o scores 70.2% (0-shot), higher than Gemini 1.5 Pro's 67.7% (4-shot Minerva Prompt).

These benchmarks suggest that both models perform well across various language understanding, reasoning, and knowledge-based tasks, with GPT-4o showing a slight edge in some areas.

What are the key factors to consider when choosing between GPT-4o and Gemini 1.5 Pro for a project?

When choosing between GPT-4o and Gemini 1.5 Pro for a project, consider the following factors:

- Context Length: If your project requires processing very large documents or extensive conversation histories, Gemini 1.5 Pro's larger context window (1M tokens) may be advantageous.

- Speed Requirements: For applications needing extremely fast output, Gemini 1.5 Pro's higher token generation speed may be preferable.

- Latency Sensitivity: If your application requires very low latency for the first response, GPT-4o's lower TTFT might be more suitable.

- Budget: Consider the pricing structure of both models in relation to your expected usage and the balance of input to output tokens in your application.

- Performance Requirements: Consider the slight performance edge of GPT-4o on certain benchmarks if your application aligns with these tasks.

- Maximum Output Length: If your application needs to generate longer responses in a single request, GPT-4o might be beneficial (though exact limits are not specified).

- Recency of Knowledge: If up-to-date information is crucial, consider the slight difference in knowledge cutoff dates.

- API Integration: Consider the ease of integration with your existing infrastructure and the specific API features offered by OpenAI (for GPT-4o) or Google (for Gemini 1.5 Pro).

Evaluate these factors based on your project's specific requirements, balancing the need for advanced capabilities with cost-effectiveness, speed, and scalability.
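The context-length and output-length factors can be turned into a quick pre-flight check. This is a simplified sketch, not a vendor API: it assumes the prompt and the reply share one context window, reads "128K" as 128,000 tokens, and treats an unspecified output cap as "no cap known". The function name `models_that_fit` is hypothetical, and token counts must come from each provider's own tokenizer.

```python
# Limits quoted in this comparison (tokens).
CONTEXT_WINDOW = {
    "GPT-4o": 128_000,            # assumption: "128K" read as 128,000
    "Gemini 1.5 Pro": 1_000_000,
}
# Only Gemini 1.5 Pro's per-request output cap is specified above.
MAX_OUTPUT = {"Gemini 1.5 Pro": 8_192}


def models_that_fit(prompt_tokens: int, output_tokens: int) -> list[str]:
    """Return the models whose quoted limits accommodate the request."""
    fits = []
    for name, window in CONTEXT_WINDOW.items():
        cap = MAX_OUTPUT.get(name)
        if cap is not None and output_tokens > cap:
            continue  # reply would exceed the known per-request output cap
        if prompt_tokens + output_tokens <= window:
            fits.append(name)
    return fits


if __name__ == "__main__":
    print(models_that_fit(100_000, 2_000))  # moderate prompt, short reply
    print(models_that_fit(500_000, 2_000))  # very large prompt
    print(models_that_fit(10_000, 10_000))  # long reply
```

A check like this narrows the field before the softer factors (budget, latency, benchmark fit) are weighed.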

How many AI models can I build on my app?

You can build as many models as you want!

Licode places no limits on the number of models you can create, allowing you the freedom to design, experiment, and refine as many data models or AI-powered applications as your project requires.

Which LLMs can we use with Licode?

Licode currently supports integration with seven leading large language models (LLMs), giving you flexibility based on your needs:

- OpenAI: GPT 3.5 Turbo, GPT 4o Mini, GPT 4o

- Google: Gemini 1.5 Pro, Gemini 1.5 Flash

- Anthropic: Claude 3 Sonnet, Claude 3 Haiku

These LLMs cover a broad range of capabilities, from natural language understanding and generation to more advanced conversational AI. Depending on the complexity of your project, you can choose the right LLM to power your AI app. This wide selection ensures that Licode can support everything from basic text generation to advanced, domain-specific tasks such as image and code generation.

Do I need any technical skills to use Licode?

Not at all! Our platform is built for non-technical users.

The drag-and-drop interface makes it easy to build and customize your AI tool, including its back-end logic, without coding.

Can I use my own branding?

Yes! Licode allows you to fully white-label your AI tool with your logo, colors, and brand identity.

Is Licode free to use?

Yes, Licode offers a free plan that allows you to build and publish your app without any initial cost.

This is perfect for startups, hobbyists, or developers who want to explore the platform without a financial commitment.

Some advanced features require a paid subscription, starting at just $20 per month.

The paid plan unlocks additional functionalities such as publishing your app on a custom domain, utilizing premium large language models (LLMs) for more powerful AI capabilities, and accessing the AI Playground—a feature where you can experiment with different AI models and custom prompts.

How do I get started with Licode?

Getting started with Licode is easy, even if you're not a technical expert.

Simply click on this link to access the Licode studio, where you can start building your app.

You can choose to create a new app either from scratch or by using a pre-designed template, which speeds up development.

Licode’s intuitive no-code interface allows you to build and customize AI apps without writing a single line of code. Whether you're building for business, education, or creative projects, Licode makes AI app development accessible to everyone.